The internet has long been the first port of call for individuals seeking to understand their health concerns. While “Dr. Google” has become a common phrase, often associated with unreliable information and misdiagnosis, a new era of self-diagnosis is emerging, powered by artificial intelligence. AI chatbots built on large language models (LLMs), such as OpenAI’s ChatGPT, Microsoft’s Bing AI, and Google’s Med-PaLM, are rapidly changing how people approach medical information and preliminary diagnosis. Trained on vast datasets of text, these tools offer a more nuanced and potentially more accurate way to explore symptoms and health concerns than traditional search engines. As healthcare systems globally grapple with workforce shortages, the potential for AI chatbots to help answer patient queries and even contribute to medical diagnosis is gaining significant attention. Early research indicates that these AI programs are more accurate than simple online searches, suggesting a future in which AI chatbots play a meaningful role in healthcare delivery.

Alt text: Individual interacting with a medical AI chatbot on a smartphone, representing the growing trend of AI in self-diagnosis.

The Rise of AI-Assisted Medical Diagnosis

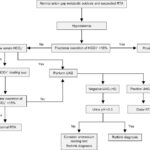

The digital transformation of medicine has accelerated in recent years, most visibly in the surge in telehealth and online patient-physician communication during the COVID-19 pandemic. The volume of digital messages between patients and healthcare providers increased dramatically, underscoring the growing reliance on digital tools in healthcare. Simpler chatbots are already used for tasks like appointment scheduling and providing basic health information, but LLM-powered chatbots go further. These systems can engage in more complex interactions, potentially bridging the gap between basic information retrieval and more sophisticated diagnostic assistance.

A study posted to the preprint server medRxiv explored the diagnostic capabilities of OpenAI’s GPT-3. Researchers presented the AI with 48 case scenarios written as descriptions of patient symptoms. GPT-3 included the correct diagnosis among its top three suggestions in 88% of cases. That is below the 96% achieved by physicians given the same prompts, but well above the 54% achieved by individuals without medical training. Prior research, meanwhile, found that traditional online symptom checkers list the correct diagnosis among their top three possibilities only about 51% of the time. Taken together, these figures point to the potential of AI chatbots for preliminary medical assessment.
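The "correct diagnosis within the top three suggestions" figures quoted above are a form of top-k accuracy. The following is a minimal sketch of how such a score could be computed; the function name, the case lists, and the diagnoses are hypothetical illustrations and are not data from the study.

```python
def top_k_accuracy(ranked_suggestions, correct_diagnoses, k=3):
    """Fraction of cases whose correct diagnosis is among the first k suggestions."""
    if len(ranked_suggestions) != len(correct_diagnoses):
        raise ValueError("each case needs exactly one correct diagnosis")
    if not correct_diagnoses:
        raise ValueError("at least one case is required")
    hits = sum(
        truth in suggestions[:k]
        for suggestions, truth in zip(ranked_suggestions, correct_diagnoses)
    )
    return hits / len(correct_diagnoses)


# Hypothetical toy data: each inner list is one tool's ranked suggestions for a case.
cases = [
    ["migraine", "tension headache", "sinusitis"],
    ["gastritis", "appendicitis", "food poisoning", "gallstones"],
    ["common cold", "influenza", "allergic rhinitis", "strep throat"],
    ["asthma", "bronchitis", "pneumonia"],
]
truths = ["tension headache", "gallstones", "influenza", "pneumonia"]

# Three of the four correct diagnoses appear in the top three suggestions.
print(f"{top_k_accuracy(cases, truths, k=3):.0%}")  # prints 75%
```

A score like this says nothing about how the suggestions were ranked within the top three, which is one reason top-three figures from different studies should be compared with care.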

Alt text: Chart comparing the diagnostic accuracy rates of AI chatbots, physicians, and traditional online symptom checkers, highlighting AI’s improved performance.

The user-friendliness of AI chatbots further distinguishes them from conventional symptom checkers. Instead of navigating rigid, algorithm-driven interfaces, users can describe their symptoms in natural language and carry on a conversational exchange with the AI. Chatbots can also ask clarifying follow-up questions, much as a doctor would, to gather more complete information. However, the accuracy of an AI diagnosis depends on the clarity and completeness of the user’s input. Vague or poorly articulated symptom descriptions are likely to produce less accurate diagnostic suggestions.

Navigating the Pitfalls of AI in Medical Diagnosis

Despite these promising advances, the integration of AI chatbots into medical diagnosis is not without challenges. One significant concern is misinformation. LLMs learn to predict the next word in a sequence from the vast amounts of online text they are trained on. This approach could give equal weight to information from credible sources like the CDC and to less reliable sources, raising the risk of perpetuating inaccurate or misleading health information. While developers like OpenAI say they “pretrain” their models for accuracy and work to mitigate “hallucinations” (instances where the AI fabricates information), the risk remains. Disclaimers accompanying these AI tools explicitly advise against using them to diagnose serious conditions or manage life-threatening situations, a sign of their current limitations and the need for caution.
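The concern about source weighting can be illustrated with a deliberately tiny next-word predictor. This is a toy sketch under strong assumptions: it counts word pairs rather than training a neural network as real LLMs do, and the three-sentence corpus is invented for illustration. It only shows that a predictor driven purely by text frequency has no notion of which source is credible.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it across all sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical corpus: one accurate statement and one inaccurate claim repeated twice.
corpus = [
    "antibiotics treat bacterial infections",  # stand-in for a credible source
    "antibiotics cure colds",                  # stand-in for an unreliable source
    "antibiotics cure colds",                  # the same claim, repeated elsewhere
]

counts = train_bigram(corpus)
print(predict_next(counts, "antibiotics"))  # prints cure
```

Because the inaccurate claim simply appears more often, it wins the prediction. Nothing in the counting step records where a sentence came from.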

The evolving landscape of online information also presents a challenge. Malicious actors could manipulate online content to skew what future iterations of LLMs learn, injecting misinformation into the AI’s knowledge base. Microsoft’s Bing AI attempts to address this by linking to sources, but even this approach is not foolproof. LLMs have been shown to fabricate sources, making it difficult for users to judge the legitimacy of the information provided. Potential solutions include stricter control over the data used to train AI models and robust fact-checking mechanisms. However, scaling these solutions to match the rapidly growing volume of AI-generated content remains a significant hurdle.

Google’s Med-PaLM adopts a different strategy, drawing upon a curated dataset of real-world patient-provider questions and answers, along with medical licensing exams. Studies evaluating Med-PaLM found that 92.6% of its answers aligned with medical consensus, comparable to 92.9% for human clinicians. While chatbot responses were noted to sometimes lack completeness compared to human answers, they were also found to be slightly less likely to cause potential harm. The ability of these AI models to pass medical licensing exams further underscores their growing competence in medical knowledge. However, real-world medical scenarios are more complex than multiple-choice exams. They require understanding the interplay of patient history, social context, and provider expertise, which is why AI is best seen as a tool to augment, not replace, human clinical judgment.

The rapid pace of deployment of LLM chatbots in medicine raises concerns about regulatory oversight. The technology is evolving faster than regulatory frameworks can adapt, potentially leading to premature or inadequately vetted implementations in healthcare settings. A measured and phased rollout, starting with clinical research and rigorous testing, is crucial to establish that these tools are safe and effective for patients before widespread adoption.

Addressing Bias and Ensuring Equity in AI-Driven Healthcare

A critical ethical consideration is the potential for AI chatbots to perpetuate existing biases prevalent in medicine and society. LLMs are trained on human-generated data, which inherently reflects societal biases related to race, gender, and other demographics. For instance, documented disparities in pain management for women and racial biases in mental health diagnoses highlight the embedded prejudices within medical systems that AI could inadvertently amplify. Studies have already indicated that AI chatbots may exhibit biases in trust assessment based on perceived race and gender.

While eliminating bias from the internet entirely is an unrealistic goal, proactive measures can be taken to mitigate bias in AI-driven healthcare. Developers can conduct preemptive audits to identify and correct biased outputs from chatbots. Furthermore, user interface design can play a role. Research suggests that presenting AI output as informational rather than prescriptive advice can reduce the likelihood of users acting on biased recommendations.
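One simple form a preemptive audit could take is a counterfactual check: send the same symptom description with only the patient descriptor changed and flag any pair of groups that receives different responses. The sketch below is an assumption-laden illustration, not a description of any developer's actual process. `audit_prompt_variants`, `biased_stub`, the template, and the groups are all hypothetical, and the stub is deliberately biased so that the audit has something to find.

```python
from itertools import combinations


def audit_prompt_variants(ask, template, groups):
    """Ask the same question for each group and flag pairs with differing responses."""
    responses = {group: ask(template.format(patient=group)) for group in groups}
    flagged = [
        (a, b)
        for a, b in combinations(groups, 2)
        if responses[a] != responses[b]
    ]
    return responses, flagged


def biased_stub(prompt):
    """Hypothetical stand-in for a chatbot, deliberately biased for demonstration."""
    if "woman" in prompt:
        return "Likely stress; rest at home."
    return "Possible cardiac cause; seek urgent care."


template = "A 55-year-old {patient} reports chest pain and shortness of breath."
groups = ["man", "woman"]

responses, flagged = audit_prompt_variants(biased_stub, template, groups)
print(flagged)  # prints [('man', 'woman')]
```

Exact string comparison is only workable for a stub. A real chatbot varies its wording from one run to the next, so a practical audit would need repeated sampling and a comparison of the substance of the advice, typically with human review.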

Diversity within the development teams creating and evaluating medical AI is also essential. A diverse team is better equipped to identify and address potential biases embedded in the technology. However, ongoing monitoring and refinement are crucial, as bias mitigation is a continuous process that needs to adapt to the evolving use of AI systems.

Building patient trust in AI chatbots is paramount for their successful integration into healthcare. Concerns exist regarding whether relying on AI for medical information might erode patient discernment compared to traditional information-seeking methods. The conversational and seemingly empathetic nature of chatbots could also lead to over-trust and the sharing of sensitive personal information, raising privacy concerns. Transparency regarding data collection practices by AI developers is crucial.

Furthermore, patient acceptance of AI as a source of medical information is not guaranteed. Experiences like the Koko mental health app experiment, where users reacted negatively upon discovering they were interacting with an AI, highlight the importance of managing patient expectations and ensuring human oversight. Surveys indicate significant discomfort among Americans regarding healthcare providers relying on AI for diagnosis and treatment recommendations.

However, studies also reveal a nuanced perspective. While people may be wary of AI for complex medical decisions, they are more trusting of AI for simpler queries. Moreover, the ability of AI to provide detailed and patient explanations, contrasting with potentially rushed human interactions, can be beneficial for some patients. Ultimately, the successful implementation of AI chatbots in medical diagnosis likely hinges on a hybrid approach, where AI augments human expertise and patients retain access to human clinicians when needed. Clear pathways for escalation to human doctors and transparent communication about the role of AI in their care are essential.

Alt text: Doctor and patient reviewing medical data on a tablet, illustrating the collaborative potential of AI and human expertise in medical diagnosis.

The future of AI in medical diagnosis is rapidly unfolding. While challenges related to accuracy, bias, privacy, and patient trust need careful consideration, the potential benefits of AI chatbots in improving access to medical information and augmenting diagnostic processes are undeniable. As we move forward, a balanced and ethical approach, prioritizing patient well-being and human oversight, will be crucial to harnessing the transformative power of AI in healthcare. The integration of AI chatbots is not about replacing doctors, but about creating a more accessible, efficient, and informed healthcare system for all.