1. Introduction

Artificial intelligence (AI) is transforming many fields, and medical diagnostics is no exception. From identifying tumors in medical images to detecting ulcers, AI systems have achieved diagnostic accuracies that rival, and sometimes surpass, those of experienced clinicians [1, 2]. This progress has extended to the complex domain of psychiatric disorders, with a surge of research exploring AI’s potential to diagnose conditions such as major depressive disorder (MDD). A mental disorder, defined as a behavioral or psychological syndrome that causes significant distress or disability [3], affects a substantial portion of the global population. Machine learning prediction of depression has become a prolific area of study, with hundreds of publications each year reporting average accuracies of around 80% and some claiming over 90% [4]. For instance, researchers using EEG feature transformation and machine learning achieved 89.02% accuracy in depression classification, suggesting that portable EEG-based systems for auxiliary depression recognition may be feasible [5]. The growing availability of portable and wearable sensors further fuels enthusiasm for AI-driven depression monitoring in everyday life.

In stark contrast to the promise of AI, mental health diagnosis in clinical practice faces significant challenges. Psychiatrists often carry heavy workloads, and diagnosis is inherently subjective: it relies heavily on personal experience and qualitative evaluation, without definitive biomarkers, which leads to inconsistencies and inaccuracies. A study in Ethiopia reported a misdiagnosis rate of over one-third (39.16%) among patients with severe psychiatric disorders in a specialized setting [6]. Misdiagnosis rates are even higher among non-psychiatrists. Research across primary care clinics in Canada found misdiagnosis rates as high as 65.9% for major depressive disorder and above 85% for bipolar disorder, panic disorder, generalized anxiety disorder, and social anxiety disorder [7]. The current diagnostic process, which depends heavily on doctor-patient communication and subjective scale ratings, is vulnerable to patient denial, low sensitivity, and subjective bias. Furthermore, a critical shortage of psychiatrists, particularly in developing nations, exacerbates the problem and hinders access to timely and accurate diagnosis. AI-driven mental health diagnosis offers a compelling alternative, promising resource efficiency, faster diagnosis, large-scale assessment, and reduced stigma associated with seeking mental health care. The development of objective, automated methods to assist psychiatrists in diagnosing mental disorders is therefore increasingly important.

However, despite the compelling narrative and research progress, the widespread adoption of AI in mental health diagnosis remains limited. Why is the clinical translation lagging behind the research advancements? To understand this gap, we must first examine the fundamental principles behind using machine learning for automated diagnosis in this complex field.

2. The Underlying Logic of Machine Learning in Mental Disorder Identification

The broad category of “mental disorder” encompasses a diverse range of conditions with significant heterogeneity. For clarity and focus, we primarily use depressive disorders, among the most prevalent types of mental disorder, as our illustrative example. Extensive research has established discernible differences between individuals with depressive disorders and healthy populations. These differences manifest across various indicators, including physiological and biochemical markers such as cerebral blood oxygen consumption [8] and neurotransmitter levels [9], electrophysiological signals such as EEG patterns [10], peripheral physiological signals such as heart rate and skin conductance [11], and behavioral cues including facial expressions, vocal characteristics, and linguistic patterns [12]. These differentiating features form the basis for training AI classifiers. The core principle is that if these discriminative measures are fed as input features into machine learning algorithms, it should be possible to build accurate predictive models for the automated diagnosis of depressive disorders.
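As a minimal sketch of this principle, the Python snippet below z-scores a handful of features and assigns a new sample to the nearest class centroid. The three feature names, all numeric values, and the nearest-centroid rule are illustrative assumptions, not a method from the cited studies; published work typically uses richer models and far more data.

```python
from statistics import mean, pstdev

# Hypothetical features: [smiles_per_minute, speech_rate_wpm, heart_rate_bpm].
# All values are invented for illustration; they do not come from any dataset.
TRAIN = [
    ([4.0, 150.0, 70.0], "healthy"),
    ([3.5, 145.0, 72.0], "healthy"),
    ([4.2, 155.0, 68.0], "healthy"),
    ([1.0, 110.0, 80.0], "depressed"),
    ([1.5, 115.0, 78.0], "depressed"),
    ([0.8, 105.0, 82.0], "depressed"),
]


def fit_scaler(rows):
    """Per-feature mean and population standard deviation for z-scoring."""
    cols = list(zip(*rows))
    return [mean(c) for c in cols], [pstdev(c) or 1.0 for c in cols]


def zscore(x, mus, sds):
    return [(v - m) / s for v, m, s in zip(x, mus, sds)]


def fit_centroids(data, mus, sds):
    """Average the z-scored feature vectors of each class."""
    by_label = {}
    for x, y in data:
        by_label.setdefault(y, []).append(zscore(x, mus, sds))
    return {y: [mean(c) for c in zip(*xs)] for y, xs in by_label.items()}


def predict(x, centroids, mus, sds):
    """Assign x to the class whose centroid is nearest (squared Euclidean distance)."""
    z = zscore(x, mus, sds)
    return min(
        centroids,
        key=lambda y: sum((a - b) ** 2 for a, b in zip(z, centroids[y])),
    )


mus, sds = fit_scaler([x for x, _ in TRAIN])
centroids = fit_centroids(TRAIN, mus, sds)
print(predict([1.2, 112.0, 79.0], centroids, mus, sds))  # depressed
```

The sketch captures only the logic: once features differ on average between groups, a classifier can separate the groups. Whether those features actually reflect a depressive disorder is the question the rest of this article examines.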

Nonverbal behaviors, particularly facial expressions [13,14], have received significant attention as predictors of depression. Facial expressions are highly visible and are often considered reliable indicators of mood, which makes them especially relevant to mood disorders such as depressive disorders. While we focus on facial cues as an example, numerous studies explore other cues, as detailed in comprehensive reviews [5,8,15]. Facial expressions, typically categorized into emotions such as anger, sadness, joy, surprise, disgust, and fear, are recognized as potentially discriminative cues for detecting depressive disorders. Individuals diagnosed with depressive disorders often exhibit reduced facial expressiveness [16]. Gavrilescu and colleagues proposed a method for estimating depression levels by analyzing facial expressions with the Facial Action Coding System, achieving 87.2% accuracy in depression identification in their experiment [17]. Specific facial features such as the duration and intensity of spontaneous smiles [18,19], mouth movements [20], and the absence of smiling [21] have also been identified as potentially valuable patterns for detecting depressive disorders. Recent work has extended facial analysis to subtler ocular features such as pupil responses. For example, research suggests that faster pupillary responses may indicate positive mental health in control groups [22], whereas depressed individuals may show slower pupil dilation under specific conditions [23]. Pupil bias and diameter have been identified as important factors in assessing depression symptoms [24]. Other relevant eye-related features include reduced eye contact [21], gaze direction [19], eyelid activity, and eye movement and blinking patterns [25].
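Smile features of the kind cited above can be computed from a per-frame intensity track for Action Unit 12 (lip corner puller) of the Facial Action Coding System. The sketch below assumes such a track is already available from a facial-analysis tool; the detection threshold, the frame rate, and the function and field names are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SmileFeatures:
    smile_fraction: float   # share of frames counted as smiling
    episodes: int           # number of distinct smile episodes
    mean_duration_s: float  # average episode length in seconds
    mean_intensity: float   # average AU12 intensity within smiling frames


def smile_features(au12, fps=30.0, threshold=1.0):
    """Summarize a per-frame AU12 intensity track.

    `threshold` is an assumed cut-off above which a frame counts as smiling.
    """
    smiling = [v >= threshold for v in au12]
    durations, run = [], 0
    for is_smiling in smiling:
        if is_smiling:
            run += 1
        elif run:
            durations.append(run)  # a smile episode just ended
            run = 0
    if run:
        durations.append(run)      # episode still running at the last frame
    smile_vals = [v for v, s in zip(au12, smiling) if s]
    return SmileFeatures(
        smile_fraction=len(smile_vals) / len(au12) if au12 else 0.0,
        episodes=len(durations),
        mean_duration_s=(sum(durations) / len(durations) / fps) if durations else 0.0,
        mean_intensity=(sum(smile_vals) / len(smile_vals)) if smile_vals else 0.0,
    )


# Hypothetical 10-frame track sampled at 5 frames per second.
track = [0.0, 1.5, 2.0, 0.2, 0.0, 1.2, 1.4, 1.6, 0.1, 0.0]
print(smile_features(track, fps=5.0))
```

For this invented track the sketch finds two smile episodes covering half the frames, each lasting 0.5 s on average.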

While single-modality approaches, such as relying solely on facial cues, have shown promising results in depressive disorder recognition, the integration of multimodal data is anticipated to further enhance diagnostic accuracy. Combining voice and visual cues, and potentially incorporating physiological information, is expected to improve the robustness of automated diagnosis. Multimodality is indeed a prominent trend in both algorithm development and database design for AI in mental health.

Researchers are leveraging visual, acoustic, verbal, and physiological signals to predict mental disorders. However, to truly understand the challenges and limitations of AI-based mental health diagnosis, we must look more closely at the nature of these disorders themselves. What are the characteristics of the mental illnesses that researchers are attempting to predict? What diagnostic processes do clinicians currently use? And do these factors present obstacles for AI in mental health diagnosis that are distinct from those encountered in other diagnostic tasks?

To illustrate these challenges, we will continue to use depressive disorders as a case study, exploring the complexities that arise when applying AI to diagnose mental illness. The DSM-5 [26] and ICD-11 [27] are the most authoritative diagnostic manuals globally. However, even the DSM, the most widely used standard, is not without its controversies. We will now examine the inherent features of depressive disorders that pose significant barriers to the effective application of AI in diagnosis.

3. Diagnostic Criteria: A Source of Challenges for AI

One fundamental challenge stems from the very nature of diagnostic criteria for mental disorders. Many diagnostic indicators are rooted in subjective experiences, rely on qualitative descriptions, or are inherently difficult to objectively quantify and standardize. For instance, diagnostic criteria for depressive disorders heavily rely on symptomatology, such as “depressed mood” or “sleep problems.” While extensive research aims to identify physiological bases and biomarkers for mental disorders, currently, there are no clinically validated biomarkers that can definitively confirm a diagnosis of major depressive disorder.

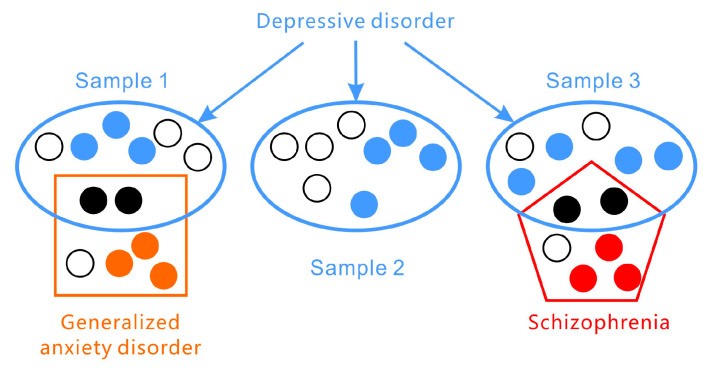

Furthermore, symptom presentation in depressive disorders varies substantially between individuals (as illustrated in Figure 1). The DSM-5 criteria for major depressive disorder list two core symptoms: (1) depressed mood most of the day and (2) markedly diminished interest or pleasure in activities. A diagnosis requires at least five of the nine listed symptoms, at least one of which must be a core symptom; in other words, three or four additional symptoms from the remaining seven, depending on whether one or both core symptoms are present. Because many different combinations satisfy this rule, depressive disorders lack a consistent symptom profile and vary considerably across individuals. The PHQ-9 (Patient Health Questionnaire-9), a widely used screening tool, assesses the nine DSM-5 criteria. Another common scale, the Hamilton Rating Scale for Depression (HAMD), does not map directly onto the DSM-5 criteria but covers broader domains such as insomnia, low mood, agitation, anxiety, and weight change. Symptom presentation also varies across developmental stages; in adolescents, for example, depressive symptoms may manifest as irritability rather than persistent low mood. Mathematical analyses suggest that at least 1497 unique symptom profiles could qualify for a depression diagnosis [28]. In some instances, patients sharing the same diagnosis may not exhibit any overlapping symptoms [29].

Figure 1.

Figure 1: The heterogeneous nature of depressive disorders is highlighted by the wide variation in symptom presentation and frequent co-occurrence with other disorders like generalized anxiety disorder and schizophrenia.
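The symptom-count rule for major depressive disorder can be checked by brute-force enumeration. The sketch below treats each of the nine DSM-5 criteria as a single yes/no item and ignores the duration and exclusion requirements, so it is a simplification: under these assumptions it counts 227 qualifying combinations. Finer-grained enumerations, for example ones that split compound criteria such as insomnia versus hypersomnia, produce larger counts; the sketch does not attempt to reproduce the figure reported in [28]. It also illustrates how two diagnosed patients can share no symptoms: at the criterion level any two qualifying profiles overlap in at least one criterion, but once compound criteria are split into their alternatives (the split used here is a hypothetical one), the overlap can drop to zero.

```python
from itertools import combinations

# The nine DSM-5 criteria for a major depressive episode, abbreviated.
CRITERIA = [
    "depressed mood", "anhedonia", "weight/appetite change",
    "sleep disturbance", "psychomotor change", "fatigue",
    "worthlessness/guilt", "concentration/indecision", "thoughts of death",
]
CORE = {"depressed mood", "anhedonia"}


def qualifies(profile):
    """Count rule only: at least 5 of the 9 criteria, including a core symptom.
    Duration and exclusion criteria are ignored in this simplification."""
    return len(set(profile)) >= 5 and bool(CORE & set(profile))


profiles = [
    frozenset(combo)
    for k in range(5, len(CRITERIA) + 1)
    for combo in combinations(CRITERIA, k)
    if qualifies(combo)
]
print(len(profiles))  # 227

# At the criterion level, any two qualifying profiles share at least one criterion.
print(min(len(a & b) for a in profiles for b in profiles))  # 1

# Hypothetical split of compound criteria into their alternatives.
TO_CRITERION = {
    "depressed mood": "depressed mood", "anhedonia": "anhedonia",
    "weight loss": "weight/appetite change", "weight gain": "weight/appetite change",
    "insomnia": "sleep disturbance", "hypersomnia": "sleep disturbance",
    "agitation": "psychomotor change", "retardation": "psychomotor change",
    "poor concentration": "concentration/indecision",
    "indecisiveness": "concentration/indecision",
}
patient_a = {"depressed mood", "weight loss", "insomnia", "agitation", "poor concentration"}
patient_b = {"anhedonia", "weight gain", "hypersomnia", "retardation", "indecisiveness"}

for patient in (patient_a, patient_b):
    print(qualifies({TO_CRITERION[s] for s in patient}))  # True
print(patient_a & patient_b)  # set(): no symptom in common
```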

Adding to the complexity, depressive disorders are not a monolithic entity but encompass a spectrum of conditions with various subcategories and variants, such as disruptive mood dysregulation disorder, major depressive disorder, and persistent depressive disorder (dysthymia).

Comorbidity, the presence of multiple disorders simultaneously, is highly prevalent in mental health (refer again to Figure 1). Depressive disorders frequently co-occur with anxiety disorders and personality disorders and can be easily confused with bipolar disorder or other mental illnesses. Symptom overlap, such as sleep or appetite disturbances, between different conditions necessitates careful differential diagnosis by clinicians. This complexity introduces opportunities for subjective bias in clinical judgment [30,31,32,33,34].

Furthermore, diagnostic criteria emphasize the functional impact of symptoms. According to the DSM, symptoms must cause clinically significant distress or impairment in social, occupational, or other important areas of functioning, and this impairment must be judged in light of the individual’s cultural context.

The dynamic and episodic nature of symptoms presents another challenge. Major depressive disorder is not a continuous state but rather characterized by episodes. Symptom severity and presentation can fluctuate significantly over time. For example, an individual might experience heightened symptoms in the morning on certain days, followed by periods of prolonged depression lasting weeks.

Finally, depressive disorders arise from intricate interactions between genetic predispositions and environmental, physiological, and sociocultural factors. The precise pathogenesis of depressive disorders remains incompletely understood. Depression is not solely a neurophysiological issue but a complex interplay between genetic vulnerability and environmental influences [35]. The combination of biological factors, family and environmental stressors, and individual vulnerabilities plays a critical role in the onset of major depressive disorder [36]. This multifaceted etiology contributes to the wide range of subjective experiences and behavioral and speech characteristics observed in individuals with depression, further complicating AI-based mental health diagnosis.

4. Limitations of Standard Diagnostic Approaches

Given the inherent variability and complexities of depressive disorders, how do clinicians navigate the diagnostic process? The absence of unique and definitive clinical indicators makes diagnosing depressive disorders a time-consuming and inherently subjective endeavor [37]. Routine assessments typically involve self-report scales and clinician-administered interviews, both primarily guided by the DSM and ICD criteria. Self-rating scales, such as the PHQ-9, Zung’s Self-rating Depression Scale, and the Beck Depression Inventory, offer a convenient and efficient method for assessing depressive symptoms. These scales are primarily used for screening and to provide supplementary information for physicians’ diagnoses. Self-report scales have been extensively used in research, demonstrating specificity and sensitivity ranging from 80% to 90%, although they are not without limitations [38]. In addition to self-report measures, clinician-rated scales like the Hamilton Rating Scale for Depression (HAMD) [39] are frequently used to assist in clinical diagnosis.
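Sensitivity and specificity alone do not tell a clinician how likely a positive screen is to be correct; that also depends on how common the condition is in the screened population. The Bayes' theorem sketch below uses a sensitivity and specificity of 85%, within the range quoted above; the prevalence values are hypothetical settings chosen for illustration, not estimates for any real population.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) of a screening test via Bayes' theorem."""
    tp = sensitivity * prevalence              # true positives (share of population)
    fp = (1 - specificity) * (1 - prevalence)  # false positives
    fn = (1 - sensitivity) * prevalence        # false negatives
    tn = specificity * (1 - prevalence)        # true negatives
    return tp / (tp + fp), tn / (tn + fn)


# Hypothetical prevalence settings; sensitivity = specificity = 0.85.
for prevalence in (0.05, 0.20, 0.50):
    ppv, npv = predictive_values(0.85, 0.85, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.2f}  NPV={npv:.2f}")
```

At a hypothetical prevalence of 5%, the positive predictive value is about 0.23, so roughly three in four positive screens would be false positives. This is one reason such scales serve as screening aids rather than diagnostic instruments.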

Clinical interviews are considered more thorough and accurate but are also more time-intensive and resource-demanding. Doctor-conducted interview-based assessments often represent the final stage in diagnostic decision-making. Diagnosing depressive disorders is a complex process influenced by factors such as the patient’s education level, cognitive abilities, honesty in reporting symptoms, and crucially, the clinician’s experience and motivation. Accurate assessment of depression severity requires comprehensive information gathering and rigorous clinical training [40]. While biological markers such as low serotonin levels [41], neurotransmitter dysfunction [42], and brain structural abnormalities [43] have been investigated as potential indicators of depression, they are not currently used as definitive diagnostic tools in routine clinical practice.

The complexity of depressive disorders calls for a holistic approach to diagnosis. Depressive disorders are not merely mood disturbances; they also have sociocultural dimensions and often lead to significant impairments in social functioning. This multifaceted nature may contribute to the high misdiagnosis rates observed in clinical settings. It also compels us to critically evaluate the depression datasets currently used in AI research and to question the representativeness and quality of their samples. Can the objective features captured in these datasets reliably predict depressive disorders? And are the annotations used to train AI models truly valid? These questions are crucial for understanding both the limitations and the potential of AI-based mental health diagnosis.

5. The Logical Fallacy in Mental Disorder Diagnosis and its Implications for AI

A fundamental challenge in mental disorder diagnosis, often overlooked, is a logical fallacy embedded in the diagnostic process itself. When a clinician diagnoses an individual with a depressive disorder, they rely on reported symptoms, such as persistent low mood and suicidal ideation. The underlying assumption is that these symptoms are caused by the depressive disorder. This corresponds to the conditional premise “If p then q,” where ‘p’ represents depressive disorder and ‘q’ represents depressive symptoms. Diagnosing from symptoms, however, reasons in the opposite direction: given “If p then q” and observing q, it concludes p. This form of inference, known as “affirming the consequent” (in short, “If depressive symptom then depressive disorder”), is a logical fallacy (as illustrated in Table 1).

Table 1.

The four formats of conditional reasoning.

| Logical Format | Diagnostic Statement | Logical Value |
|---|---|---|
| DD\* → DS\*\* (Modus Ponens) | If depressive disorder, then depressive symptom | Valid |
| Non-DD → Non-DS (Denying the Antecedent) | If no depressive disorder, then no depressive symptom | Invalid |
| DS → DD (Affirming the Consequent) | If depressive symptom, then depressive disorder | Invalid |
| Non-DS → Non-DD (Modus Tollens) | If no depressive symptom, then no depressive disorder | Valid |

\* DD = depressive disorder; \*\* DS = depressive symptom.
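The validity column of Table 1 can be verified mechanically with a truth table: an argument form is valid if its conclusion holds in every row where both the major premise “if p then q” and the minor premise hold. In the Python sketch below, p stands for depressive disorder and q for depressive symptom; the function and dictionary names are illustrative.

```python
from itertools import product


def implies(a, b):
    """Material conditional: 'if a then b'."""
    return (not a) or b


# Each form pairs a minor premise with a conclusion; the major premise is p -> q.
FORMS = {
    "Modus Ponens":             (lambda p, q: p,     lambda p, q: q),
    "Denying the Antecedent":   (lambda p, q: not p, lambda p, q: not q),
    "Affirming the Consequent": (lambda p, q: q,     lambda p, q: p),
    "Modus Tollens":            (lambda p, q: not q, lambda p, q: not p),
}


def is_valid(premise, conclusion):
    """Valid iff the conclusion holds in every row where all premises hold."""
    return all(
        conclusion(p, q)
        for p, q in product([True, False], repeat=2)
        if implies(p, q) and premise(p, q)
    )


for name, (premise, conclusion) in FORMS.items():
    print(f"{name:26} {'Valid' if is_valid(premise, conclusion) else 'Invalid'}")
```

Both invalid forms fail on the same row, where q is true and p is false, that is, where depressive symptoms are present without an underlying depressive disorder.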

Depressive disorders are essentially labels assigned to clusters of symptoms. This means that the symptoms themselves do not inherently explain why a person has a depressive disorder, nor does the disorder label fully explain the occurrence of the symptoms. Therefore, attempting to definitively identify a depressive disorder solely based on presented symptoms is logically problematic. For example, an individual might experience depressive symptoms due to recent significant negative life events. If these stressors resolve, the depressive mood may also subside. Conversely, depressive symptoms could be secondary to another underlying psychological disorder, such as a personality disorder.

The imprecise and potentially circular relationship between depressive disorders and their associated symptom sets creates significant challenges for diagnosis. If AI systems are trained to mimic current clinical diagnostic practice, they will inevitably inherit, and may amplify, this logical fallacy. This highlights a critical limitation of relying solely on symptom-based data for AI-based mental health diagnosis. A deeper understanding of the etiological factors and underlying mechanisms of mental disorders is necessary to move beyond symptom-based diagnosis and develop more robust and valid AI diagnostic tools.

6. Dataset Limitations: Hindering Progress in AI for Mental Health

Computer scientists dedicate significant effort to developing computationally efficient and robust AI models using specific datasets. Consequently, the accuracy and generalizability of depressive disorder recognition using AI are heavily dependent on the quality of the training data, including the representativeness of the samples and the validity of their annotations. We will now examine common datasets used in depression recognition research and discuss the limitations arising from the characteristics of these datasets and the inherent challenges of diagnosing mental disorders.

6.1. Common Datasets for Depression Recognition

Many datasets used in research are created for specific studies and are not publicly available for broader depression recognition research. Only a limited number of datasets have been released publicly for this purpose. Notable examples include the AVEC (The Continuous Audio/Visual Emotion and Depression Recognition Challenge) datasets from 2013 and 2014, and the DAIC-WOZ (Distress Analysis Interview Corpus-Wizard of Oz) dataset.

The AVEC2013 and AVEC2014 datasets are subsets of the larger Audio-Visual Depression Language Corpus. AVEC2013 [44] comprises 340 videos recorded in German, in which participants performed human-computer interaction tasks in front of a webcam and microphone. The recordings include free speech, reading, singing, and picture-based association tasks. Each participant’s depression severity was annotated with their score on the Beck Depression Inventory-II (BDI-II) [45], a self-report questionnaire. AVEC2014 [46] is a smaller subset of AVEC2013, consisting of 300 German-language videos with shorter clip durations. The DAIC-WOZ dataset [47], annotated using the PHQ-8 scale [48], was used in the AVEC challenges of 2016 and 2017. DAIC-WOZ employs a virtual interviewer whose emotional expression is strictly controlled during the interview. The corpus collects audio, video, and depth-sensor recordings, along with physiological signals including galvanic skin response, electrocardiogram, and respiration. E-DAIC, an extended version of DAIC-WOZ, contains data from semi-clinical interviews designed to support the diagnosis of psychological distress conditions such as anxiety and depression [15]. The E-DAIC dataset includes 163 training, 56 development, and 56 test samples, with participant data including age, gender, and PHQ-8 scores. This dataset was used in the AVEC2019 challenge [21].
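DAIC-WOZ and E-DAIC label participants with PHQ-8 scores, and such totals are often collapsed into a binary label for classification. The sketch below assumes the conventional PHQ-8 scoring (eight items, each scored 0 to 3, total 0 to 24) and a cut-off of 10 for the binary label; the function names are hypothetical. It illustrates that a one-point difference in self-reported item scores can flip the label a participant receives.

```python
PHQ8_ITEMS = 8      # each item is scored 0 ("not at all") to 3 ("nearly every day")
BINARY_CUTOFF = 10  # conventional PHQ-8 cut-off, assumed here for the binary label


def phq8_total(item_scores):
    """Validate and sum the eight PHQ-8 item scores (total range 0-24)."""
    if len(item_scores) != PHQ8_ITEMS:
        raise ValueError(f"expected {PHQ8_ITEMS} item scores, got {len(item_scores)}")
    if any(s not in (0, 1, 2, 3) for s in item_scores):
        raise ValueError("each item must be scored 0, 1, 2, or 3")
    return sum(item_scores)


def phq8_binary(item_scores, cutoff=BINARY_CUTOFF):
    """Collapse the total into a binary label: 1 = 'depressed', 0 = 'not depressed'."""
    return int(phq8_total(item_scores) >= cutoff)


# Two hypothetical respondents whose answers differ on a single item by one point.
respondent_a = [1, 1, 1, 1, 1, 2, 1, 1]
respondent_b = [1, 1, 1, 1, 1, 2, 2, 1]
print(phq8_total(respondent_a), phq8_binary(respondent_a))  # 9 0
print(phq8_total(respondent_b), phq8_binary(respondent_b))  # 10 1
```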

6.2. Ecological Validity and Representativeness of Recorded Data

A significant limitation of many existing datasets is their lack of ecological validity. Many samples consist of videos of individuals in artificial interview settings, interacting with clinicians or virtual interviewers. While some datasets incorporate multimodal information like depression scale scores and physiological data [15], the core data often comes from these structured interview scenarios.

It is crucial to recognize that this interview context is a highly specific and potentially artificial situation. A patient seeking diagnosis in an outpatient clinic is often consciously presenting their symptoms to communicate their distress to the clinician. They may emphasize their difficulties, express sadness, and project despair in their voice and demeanor, which might not accurately reflect their typical daily state. As previously discussed, major depressive disorder is episodic, and symptom presentation can vary considerably. Patients in interview settings may be consciously or unconsciously “performing” a depressed state, which may differ significantly from their behavior in naturalistic settings. Therefore, models trained on these interview-based datasets may only be reliably applicable to similar contrived situations where individuals are actively demonstrating their symptoms. Furthermore, the structured nature of interview questions and the controlled environment limit the generalizability to real-world, noisy conditions. Factors like group demographics, language, and cultural background further restrict the representativeness of these datasets.

6.3. Small Sample Sizes: A Persistent Constraint

A consistent limitation across available datasets is their relatively small sample size. Ethical review requirements and the sensitivity of mental health data constrain both the number of participants who can be recruited and the extent to which the collected data can be shared.

Furthermore, heterogeneity across datasets poses a significant challenge for establishing cross-dataset validity and generalizability. Variations in imaging data acquisition protocols, scanning parameters, and data processing methods hinder the direct comparison and pooling of data from different sources. Together, these factors make it difficult to draw broad, generalizable conclusions about AI-based mental health diagnosis from results obtained on individual datasets.
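One way small samples can inflate reported accuracy is overfitting: with few participants and many features, a flexible model can fit even labels that carry no information at all. The simulation below is a sketch under stated assumptions: features and labels are random numbers, not data from any depression dataset, and a 1-nearest-neighbor classifier stands in for a flexible model. Training accuracy is perfect by construction, while leave-one-out accuracy should hover near chance.

```python
import random


def nn_predict(train_x, train_y, x, skip=None):
    """Label of the nearest training point, optionally excluding index `skip`."""
    best = min(
        (i for i in range(len(train_x)) if i != skip),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], x)),
    )
    return train_y[best]


rng = random.Random(0)
n_samples, n_features = 40, 50  # few participants, many features
X = [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_samples)]
y = [rng.randint(0, 1) for _ in range(n_samples)]  # labels are pure noise

# Scoring on the data used for fitting: each point finds itself as nearest neighbor.
train_acc = sum(nn_predict(X, y, X[i]) == y[i] for i in range(n_samples)) / n_samples
# Leave-one-out: each point is classified using the other points only.
loo_acc = sum(nn_predict(X, y, X[i], skip=i) == y[i] for i in range(n_samples)) / n_samples

print(f"training accuracy:      {train_acc:.2f}")  # 1.00
print(f"leave-one-out accuracy: {loo_acc:.2f}")
```

Held-out evaluation exposes this problem within a single dataset, but it cannot detect the cross-dataset heterogeneity described above; that requires validation on independently collected data.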

6.4. Oversimplified and Limited Diagnostic Categories

Many datasets simplify the complex reality of mental disorders by sorting samples into two classes: “depressed” versus “healthy.” With balanced classes, random guessing alone already yields 50% accuracy, and with imbalanced classes, always predicting the majority class scores even higher. While the AVEC series uses a continuous BDI score, it still focuses on a single dimension, depression severity, effectively sampling a spectrum within one diagnostic category. This approach is fundamentally inconsistent with the complexity of real-world clinical settings. Outpatients present with a wide range of problems and symptom profiles that are not easily categorized. Symptoms of different mental disorders can overlap, and diagnostic decisions based solely on DSM criteria become extremely challenging in such cases. Comorbidity complicates matters further: clinicians must often determine the primary or dominant disorder, as this significantly affects treatment strategy. For example, differentiating between depression with comorbid schizophrenia and schizophrenia with secondary depression is crucial for appropriate medication management.
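A reported accuracy is only meaningful relative to what a label-blind strategy would achieve on the same class distribution. The sketch below compares uniform random guessing with always predicting the majority class; the two class splits are hypothetical.

```python
from collections import Counter


def chance_baselines(labels):
    """Expected accuracy of two strategies that never look at the input."""
    counts = Counter(labels)
    n = len(labels)
    return {
        # Guessing uniformly among k classes is right 1/k of the time on average,
        # whatever the class balance.
        "uniform_random": 1 / len(counts),
        # Always predicting the most frequent class is right at that class's frequency.
        "always_majority": max(counts.values()) / n,
    }


# Two hypothetical samples of 100 participants.
print(chance_baselines(["depressed"] * 50 + ["healthy"] * 50))
# {'uniform_random': 0.5, 'always_majority': 0.5}
print(chance_baselines(["depressed"] * 30 + ["healthy"] * 70))
# {'uniform_random': 0.5, 'always_majority': 0.7}
```

On the hypothetical 30/70 split, a classifier that never outputs “depressed” already scores 70%, so accuracy should be read against this baseline or replaced with a class-balanced metric.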

The reliance on artificially constructed datasets with small samples, limited ecological validity, and simplified diagnostic categories raises serious questions about the true clinical utility of “AI diagnosis” in mental health. The high accuracy rates reported in research settings may partly be a consequence of these dataset limitations, amounting to a form of circular validation: models are evaluated on data collected under the same artificial conditions in which they were trained. Consequently, AI diagnostic models trained on these datasets are likely to perform considerably worse when confronted with ecologically valid clinical data.

7. Annotation Challenges: The Foundation of AI Learning

7.1. Validity of Annotations in Mental Health Datasets

Data annotations serve as the bedrock for training and evaluating AI models. However, the validity of annotations in many mental health datasets is questionable. In the AVEC2013 challenge, for example, participants were tasked with predicting the level of self-reported depression, as indicated by the BDI, for each experimental session. This annotation approach is difficult for clinicians to interpret. An individual’s emotional state is often concealed or subtly expressed, even without conscious effort to suppress it. Scoring a patient’s depression level based solely on observing their performance in a recorded session, using a self-report scale like the BDI, is inherently problematic. The BDI contains numerous items that are based on subjective internal experiences not directly observable by others. Furthermore, individuals with depression do not consistently exhibit a depressed state. Symptoms may be most pronounced during acute episodes, while at other times, outward signs of depression might be subtle or absent to an observer.

The validity of annotations is paramount for the practical applicability of AI models. If the annotations themselves are not reliable representations of the underlying construct (e.g., depression severity), models trained on these annotations will likely be of limited clinical use. The models may learn to predict the annotation score, but not necessarily the actual clinical diagnosis or the patient’s true mental state.
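A simple calculation shows how annotation errors distort measured performance. Assume binary labels that are wrong at a rate e, with those errors independent of the model's own errors; a model whose accuracy against the true state is t then agrees with the labels at a rate t(1 - e) + (1 - t)e, because agreement occurs when both are right or both are wrong. The independence assumption and the rates used below are hypothetical.

```python
def accuracy_vs_labels(true_accuracy, label_error):
    """Expected agreement between a binary classifier and noisy annotations.

    Assumes annotation errors occur at rate `label_error` independently of the
    classifier's own errors: agreement happens when both are right or both wrong.
    """
    t, e = true_accuracy, label_error
    return t * (1 - e) + (1 - t) * e


# Hypothetical rates: annotations wrong 20% of the time.
print(round(accuracy_vs_labels(0.90, 0.20), 2))  # 0.74
print(round(accuracy_vs_labels(1.00, 0.20), 2))  # 0.8: even a perfect model is capped
print(round(accuracy_vs_labels(0.50, 0.20), 2))  # 0.5: chance stays at chance
```

Under these assumptions, even a model that is always right about the true state agrees with 20%-noisy annotations only 80% of the time, and without knowing e, a measured score cannot be translated back into accuracy about the true state.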

7.2. Bridging the Gap Between Objective Measures and Subjective Experiences

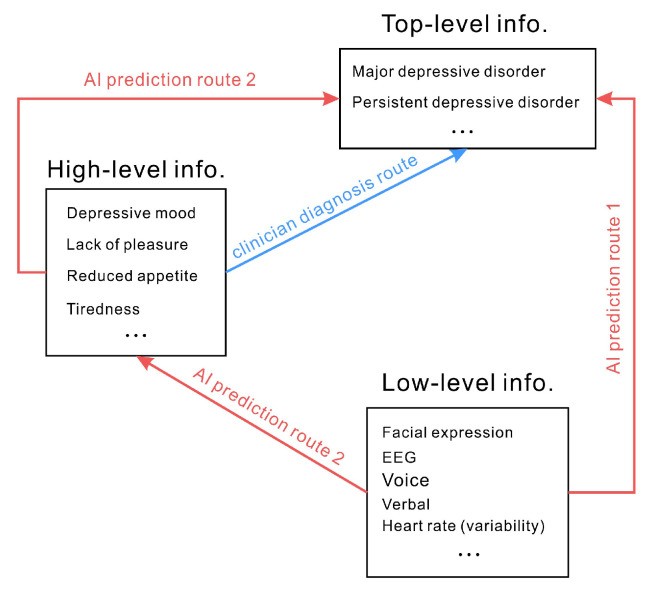

Diagnostic criteria for depressive disorders often rely on subjective experiences and qualitative descriptions, which represent high-level information. In contrast, AI models are typically trained on objectively measured signals and behavioral cues, which represent low-level information. Predicting depression from physiological signals or facial expressions therefore assumes that objectively measurable signals map directly onto subjective feelings and qualitative descriptions. In essence, it assumes that low-level information can directly predict top-level constructs such as “depression” (as depicted in Figure 2, route 1). For example, chaining “less smiling indicates depressed mood” with “depressed mood signifies depressive disorder” leads to the conclusion that depression can be predicted directly from smiling frequency. However, the relationship between smiling and emotional state is not straightforward. Research has extensively documented inconsistencies and complexities in the link between facial expressions and underlying emotions [49]. It is therefore inaccurate to assert that smiling frequency directly predicts the presence of a depressive disorder. Similarly, physiological indicators such as heart rate, blood oxygen level, and skin conductance, while potentially correlated with emotional states, have no clear, direct, and specific relationship with high or low mood, and thus are not directly indicative of depressive disorders. Inferring a person’s mood or the presence of a depressive disorder from verbal and physical indicators is a complex and often unreliable process. A fundamental challenge for AI-based mental health diagnosis, therefore, is that many objectively measured signals may lack a consistent direct or even indirect relationship with the complex construct of depressive disorders.

Figure 2.

Figure 2: Diagnostic pathways in mental health. Clinician diagnosis relies on subjective experiences and qualitative descriptions (high-level information). AI often attempts to directly predict top-level information (e.g., depression) from low-level information (e.g., facial expressions) via route 1. An alternative, less common approach (route 2) involves AI predicting intermediate high-level information (e.g., emotions) before inferring top-level diagnoses.

Figure 2 also illustrates a less common alternative (route 2). In this route, AI models first predict intermediate high-level information, such as emotional states, from low-level signals, and the predicted emotional states are then used to infer top-level diagnostic categories such as depressive disorders. This two-step approach may offer a more nuanced and potentially more valid pathway for AI-based mental health diagnosis, because it bridges the gap between objective measures and subjective experiences in a more structured manner. Even so, it still faces the challenge of accurately and reliably predicting subjective emotional states from objective signals.
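The two-stage structure of route 2 can be sketched as follows. Stage 1 maps per-frame low-level features to a probability distribution over a few emotions; stage 2 summarizes the resulting emotion trajectory into an interpretable intermediate index that a later step, or a clinician, would relate to diagnostic criteria. Everything specific here is a hypothetical assumption for illustration: the two input features (intensities of Action Units 12 and 15, the lip corner puller and lip corner depressor), the linear weights, the three emotion categories, and the “sadness dominance” index. The final inference from the index to a diagnosis is deliberately left out.

```python
import math

EMOTIONS = ("sadness", "joy", "neutral")

# Hypothetical stage-1 weights over two features: (AU12 intensity, AU15 intensity).
WEIGHTS = {
    "sadness": (-1.0, 2.0),
    "joy":     (2.0, -1.0),
    "neutral": (0.0, 0.0),
}


def emotion_probs(features):
    """Stage 1: low-level features -> softmax probabilities over emotions."""
    scores = {e: sum(w * f for w, f in zip(WEIGHTS[e], features)) for e in EMOTIONS}
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {e: math.exp(s - top) for e, s in scores.items()}
    z = sum(exps.values())
    return {e: v / z for e, v in exps.items()}


def sadness_dominance(frames):
    """Stage 2: share of frames whose most probable emotion is sadness."""
    tops = [max(p, key=p.get) for p in map(emotion_probs, frames)]
    return tops.count("sadness") / len(tops)


frames = [(0.1, 1.2), (0.0, 0.9), (1.5, 0.1), (0.2, 1.0)]  # invented per-frame features
print(round(sadness_dominance(frames), 2))  # 0.75
```

The design choice worth noting is that each stage produces an output that can be checked against something observable, such as self-reported emotion, before any diagnostic inference is made.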

8. Multimodality: A Panacea or Another Layer of Complexity?

Many researchers believe that the limitations of single-modality approaches can be overcome by integrating multiple sources of information through multimodality. The rationale is that while a single modality may not consistently map to subjective experiences, combining multiple modalities could capture a more comprehensive and robust representation of the underlying mental state. For example, facial expressions might be informative for individual A, vocal cues for individual B, and heart rate variability for individual C. In such scenarios, multimodality could potentially provide the key features required to accurately predict depressive disorders, accounting for individual variability. However, the success of multimodality hinges on the assumption that the signals are genuinely valid and provide complementary, rather than conflicting, information.

In reality, multimodal information can also introduce noise and inconsistencies. Individuals experiencing a depressive episode, for instance, exhibit diverse symptom presentations: some become irritable and agitated, while others become withdrawn and apathetic, leading to vastly different levels of emotional expressiveness across modalities. For an AI prediction model to adapt effectively to variations in gender, age, personality traits, and individual pathways to depression, the required training sample would be enormous and the practical feasibility extremely low. Furthermore, simply combining multiple noisy or weakly informative modalities does not guarantee improved diagnostic accuracy. Careful selection of relevant modalities, sophisticated fusion techniques, and robust validation strategies are crucial for realizing the potential benefits of multimodality in AI-based mental health diagnosis. Without these considerations, multimodality may simply add another layer of complexity without improving the accuracy or clinical utility of AI diagnostic systems.
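The point that combining noisy or conflicting modalities need not help can be seen in the simplest form of late fusion, a weighted average of per-modality probabilities. The sketch below uses invented per-modality outputs and weights for a single hypothetical participant; the modality names and the averaging rule are illustrative assumptions, not a recommended fusion method.

```python
def late_fusion(probs, weights=None):
    """Weighted average of per-modality probabilities that a participant is depressed."""
    weights = weights or {m: 1.0 for m in probs}
    total = sum(weights[m] for m in probs)
    return sum(weights[m] * p for m, p in probs.items()) / total


# Hypothetical outputs: only the face model is informative for this participant.
one_informative = {"face": 0.80, "voice": 0.50, "heart_rate": 0.50}
# Hypothetical outputs: two modalities pointing in opposite directions.
conflicting = {"face": 0.90, "voice": 0.10}

print(round(late_fusion(one_informative), 2))  # 0.6: signal diluted
print(round(late_fusion(conflicting), 2))      # 0.5: signals cancel out
face_first = {"face": 3.0, "voice": 1.0, "heart_rate": 1.0}
print(round(late_fusion(one_informative, face_first), 2))  # 0.68
```

Up-weighting the face channel helps only because the sketch assumes we already know the face is the informative modality for this participant; in practice, which modality is informative varies across individuals, which is precisely the difficulty described above.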

9. Conclusions: The Path Forward for AI in Mental Health

The inherent subjectivity of mental illness diagnosis underscores the appeal of AI-driven approaches, which promise objectivity by leveraging large datasets and algorithmic analysis free from human biases and subjective interpretation. While the potential of AI-based mental health diagnosis is undeniable and its role is expected to grow, significant hurdles remain.

These challenges are partly technical, stemming from data limitations such as dataset size, ecological validity, and annotation quality. Such obstacles could potentially be addressed through advances in data acquisition, annotation methodology, and algorithm development. A more fundamental set of challenges, however, arises from the nature of mental illness diagnosis itself. Current diagnostic criteria often rely on symptom-based labels and subjective, qualitative descriptions, whereas AI training data consist primarily of low-level, objectively measurable signals. The dominant approach of directly predicting high-level diagnostic categories from these low-level signals may build inherent flaws into AI system design, because it does not fully account for the complexity of mental disorders or the limitations of current diagnostic paradigms.

Ultimately, the central challenge in AI-based mental health diagnosis is not solely technical or data-centric; it is deeply intertwined with our fundamental understanding of mental disorders. Advancing AI in this domain requires not only technical innovation but also a paradigm shift in how we conceptualize, define, and diagnose mental illnesses. Future research must focus on developing more nuanced and valid diagnostic frameworks, integrating multi-level data (from biological to social factors), and addressing the ethical and practical considerations of implementing AI in mental health care. Only through a holistic and interdisciplinary approach can we unlock the true potential of AI to improve mental health diagnosis and, ultimately, patient care.

Author Contributions

Conceptualization, W.-J.Y. and K.J.; writing, Q.-N.R. and W.-J.Y. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was funded by the Wenzhou Science and Technology Project of Zhejiang, China (G20210027) and the Zhejiang Medical and Health Science and Technology Project (2023RC273).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

1 Dorbala, S.; Ananthakrishnan, L. Artificial intelligence in medical imaging. Diagnostics 2023, 13, 2.

2 Li, Z.; Liu, J.; Zhao, Z.; Chen, Q.; Zhang, Y.; Jiang, Y.; Zhang, Y.; Liu, L.; Wang, S.; Zhang, J.; et al. Artificial intelligence in diagnosis of digestive system diseases. Diagnostics 2023, 13, 147.

3 American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Publishing: Washington, DC, USA, 2013.

4 Gaur, P.; Al Jabery, M.; Thapa, D.; Rawat, D.; Bhattarai, P.; Aryal, K.K.; Sadaoui, S.; Hamad, A.I.; Dawoud, D.; Younes, M.I.; et al. Artificial intelligence in depression screening and diagnosis: A systematic review. PLoS ONE 2023, 18, e0289578.

5 Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adam, M.; Gertych, A.; San Tan, R. Applications of deep convolutional neural network-based automated diagnosis of brain disorders. Neurocomputing 2019, 328, 9–29.

6 Fekadu, A.; Medhin, G.; Alem, A.; Shibre, T.; Prince, M.; Gulliver, P. Misdiagnosis of severe mental illness in Ethiopia: A prospective cohort study. BMC Psychiatry 2009, 9, 52.

7 Pies, R.W. Misdiagnosis of bipolar disorder: Frequency and clinical consequences. J. Clin. Psychiatry 2006, 67, 9–16.

8 Goldschmidt, A.B.; Rossetti, M.G.; Radua, J.; Rubia, K.; Mataix-Cols, D. Neuroimaging of the orbitofrontal cortex in obsessive-compulsive disorder: A systematic review and meta-analysis. Neurosci. Biobehav. Rev. 2013, 37, 14–35.

9 Belmaker, R.H.; Agam, G. Major depressive disorder. N. Engl. J. Med. 2008, 358, 55–68.

10 Murakami, H.; Okada, G.; Yoshimura, R.; Onoda, K.; Yamawaki, S.; Kishimoto, T.; Ryota, N.; Kasai, K.; Yamasue, H. Classification of depression and schizophrenia using resting-state fMRI: A systematic review and meta-analysis. Neurosci. Biobehav. Rev. 2017, 75, 199–212.

11 Kim, J.E.; Kim, S.H.; Kim, J.H.; Park, J.Y.; Kim, J.J.; Jung, W.H.; Choi, J.S.; Kim, K.W.; Shin, Y.C.; Kim, J.I. Peripheral physiological responses to emotional stimuli in patients with major depressive disorder and healthy controls. J. Affect. Disord. 2014, 168, 136–142.

12 Cummins, N.; Burnham, D.; Schiller, N.O.; André, E. A review of depression and speech: Acoustic and prosodic characteristics. Speech Commun. 2015, 60, 10–25.

13 Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 1971, 17, 124–129.

14 Ekman, P. Facial expressions of emotion: New findings, new questions. Psychol. Sci. 1992, 3, 34–38.

15 Valstar, M.; Jiang, B.; Almaev, T.; Schuller, B.; Heylen, D.; Campbell, M.; Stratou, G.; Arsic, D.; Pantic, M. AVEC 2016 multimodal affect recognition workshop and challenge. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge (AVEC’16), Amsterdam, The Netherlands, 16 October 2016; pp. 3–10.

16 Sloan, D.M.; Strauss, M.E.; Wisner, K.L.; Hayes, A.M. Diminished facial expression in depressed patients. Am. J. Psychiatry 2001, 158, 1009–1011.

17 Gavrilescu, M.; Vizireanu, N.; Ciobanu, A.M.; Strungaru, R. Facial expression analysis for depression level determination. In Proceedings of the 2014 9th International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; Volume 1, pp. 682–689.

18 Ruch, W.; Ekman, P. The expressive display of surprise. J. Pers. Soc. Psychol. 2001, 80, 286–295.

19 El Kaliouby, R.; Teeters, A.; Picard, R.W. An exploratory study of automatic recognition of posed and spontaneous smiles. In Affective Computing and Intelligent Interaction; Springer: Berlin/Heidelberg, Germany, 2006; pp. 615–622.

20 Cohn, J.F.; Ambadar, Z.; Ekman, P. Observer-based measurement of facial expression with the facial action coding system. In The Handbook of Emotion Elicitation and Assessment; Coan, J.A., Allen, J.J.B., Eds.; Oxford University Press: New York, NY, USA, 2007; pp. 203–221.

21 Rienks, R.; Westerdijk, H.; de Miranda Azevedo, R.; Gootjes, L.; van den Broek, D.; de Jong, J.; Vlasman, R.; van Vugt, M.; Streefkerk, P.; van der Gaag, M.; et al. The AVEC 2019 workshop and challenge: Cross-cultural affect recognition and depression detection. In Proceedings of the 9th International Workshop on Audio/Visual Emotion Challenge and Affective Behavior Analytics, Nice, France, 21–25 October 2019; pp. 3–10.

22 Siegle, G.J.; Ichikawa, N.; Steinhauer, S.R.; Thase, M.E. Blink before you think: Pupillary and frontal measures of the cognitive modulation of emotion. Biol. Psychiatry 2008, 64, 863–869.

23 Abercrombie, H.C.; Chambers, C.D.; Geyer, M.A.; Swerdlow, N.R. Acoustic startle response in subjects with major depression: Sensorimotor gating and prepulse inhibition. Biol. Psychiatry 2001, 50, 724–731.

24 Harrison, F.E.; Gray, L.M.; Norris, K.; Lewis, P.A.; Cornish, K.; Vitaro, F.; Tremblay, R.E.; Day, J.U. Pupil bias and diameter predict symptoms of depression in adolescence. J. Affect. Disord. 2017, 218, 334–341.

25 Schneider, M.; Leweke, F.M.; Weber, M.; Radermacher, M.; Klosterkötter, J.; Ruhrmann, S. Reduced eye blink rates in subjects at risk for psychosis indicate dopaminergic hyperactivity and predict transition to psychosis. J. Psychiatr. Res. 2008, 42, 12–17.

26 Regier, D.A.; Kuhl, E.A.; Kupfer, D.J. The DSM-5: Classification and criteria changes. World Psychiatry 2013, 12, 92–98.

27 World Health Organization. International Statistical Classification of Diseases and Related Health Problems (ICD-11); World Health Organization: Geneva, Switzerland, 2018.

28 Zimmerman, M.; Chelminski, I.; Posternak, M.A. Implications of dimensional models of personality for the diagnostic and statistical manual of mental disorders, fifth edition. Dialogues Clin. Neurosci. 2011, 13, 317–325.

29 Kendler, K.S.; First, M.B.; Klein, D.N.; Kocsis, J.H.; Narrow, W.E.; Nelson, J.C.; Siris, S.G.; Verdoux, H.; Weissman, M.M.; Hyman, S.E. What is a valid psychiatric diagnosis? Arch. Gen. Psychiatry 2011, 68, 1169–1177.

30 Phillips, M.L.; Swartz, H.A. A critical appraisal of neuroimaging studies of bipolar disorder: Toward a research agenda for the next decade. Biol. Psychiatry 2014, 75, 336–343.

31 Phillips, M.L.; Grande, I.; Gruber, O.; Suppes, T.J. Neurobiology of bipolar disorder: Toward an integrated model. Lancet Psychiatry 2015, 2, 636–648.

32 Savitz, J.; Price, J.L. The human brain in major depressive disorder. Prog. Brain Res. 2010, 186, 219–243.

33 Mayberg, H.S. Deep brain stimulation for treatment-resistant depression. Neurosurg. Clin. N. Am. 2003, 14, 549–563.

34 Drevets, W.C.; Price, J.L.; Furey, M.L. Neuroimaging and neuropathological studies of depression: Implications for the cognitive-emotional features of mood disorders. Ann. N. Y. Acad. Sci. 2008, 1121, 431–457.

35 Caspi, A.; Moffitt, T.E. Gene-environment interactions in psychiatry: Present and future. Nat. Rev. Neurosci. 2006, 7, 583–590.

36 Hammen, C.L. Stress and depression. Annu. Rev. Clin. Psychol. 2005, 1, 293–319.

37 Zimmerman, M. Why it is important to study the reliability of psychiatric diagnosis. World Psychiatry 2013, 12, 183–184.

38 Santor, D.A.; Coyne, J.C.; Mathew, R.J. Diagnostic screening scales: Scale development and validation. J. Consult. Clin. Psychol. 1998, 66, 228–239.

39 Hamilton, M. A rating scale for depression. J. Neurol. Neurosurg. Psychiatry 1960, 23, 56–62.

40 First, M.B. Clinical utility in the DSM-5 and ICD-11: Introduction to the special section. Int. J. Clin. Health Psychol. 2015, 15, 83–86.

41 Cowen, P.J.; Browning, M. What has serotonin to do with depression? World Psychiatry 2015, 14, 158–160.

42 Krishnan, K.R. Neurocircuitry of mood disorders. Biol. Psychiatry 2002, 52, 489–499.

43 Videbech, P.; Ravnkilde, B. Hippocampal volume and depression: A meta-analysis of MRI studies. Am. J. Psychiatry 2004, 161, 1957–1966.

44 Valstar, M.; McKeown, G.; Healy, G.; Snedeker, J.; Pantic, M. AVEC 2013: Audio-visual emotion challenge. In Proceedings of the 3rd International Workshop on Audio-Visual Emotion Challenge, Nice, France, 21–25 October 2013; pp. 3–10.

45 Beck, A.T.; Steer, R.A.; Brown, G.K. Beck Depression Inventory Manual, 2nd ed.; Psychological Corporation: San Antonio, TX, USA, 1996.

46 Valstar, M.; Pantic, M.; Caridakis, G.; McKeown, G.; Röcke, C.; Schuller, B. AVEC 2014: 2014 audio/visual emotion challenge and workshop. In Proceedings of the 4th International Workshop on Audio-Visual Emotion Challenge (AVEC’14), Brisbane, Australia, 26–31 October 2014; pp. 3–10.

47 Ringeval, F.; Valstar, M.; Gratch, J.; Schuller, B.; Jan, S.R.; Cumbo, M.; Lalanne, D.; Cohn, J.F.; Pantic, M. AVEC 2017 workshop and challenge: Emotion recognition and depression detection in dyadic interactions. In Proceedings of the 7th International Workshop on Audio/Visual Emotion Challenge (AVEC’17), Bretton Woods, NH, USA, 23–27 October 2017; pp. 3–10.

48 Kroenke, K.; Spitzer, R.L.; Williams, J.B. The PHQ-9: Validity of a brief depression severity measure. J. Gen. Intern. Med. 2001, 16, 606–613.

49 Russell, J.A. Is there universal recognition of emotion from facial expression? A review of cross-cultural studies. Psychol. Bull. 1994, 115, 102–141.